The future of Digital Compositing

- Aug 5, 2025

Updated: Aug 9, 2025

Where are we headed?

Digital compositing has always been moving forward. Long before Méliès or Chomón, people were already trying to create impossible visuals. The technology has evolved a lot, from analog to digital, but the basic concept remains the same: stacking different elements together so that they look like part of one seamless shot.

Making that composition believable can often be tricky. But over the years, more and more tools have been built to help artists pull off what used to seem impossible.

Roto, keying, and color grading are still core tools in every compositor’s toolkit. And in recent years, things like 3D tracking have had a huge impact on how VFX is done — both in 2D and 3D workflows.

But what’s coming next? What new tools are just around the corner? Where is digital compositing heading?

What comes next?

In this post, I want to share a few predictions. Some might turn out to be true, some might not — time will tell.

I won’t be covering everything here. Real-time 3D integration, advances in render engines, and the growing number of free tools shared online are all pushing the industry forward at an incredible pace, and I can only touch on some of that in one post.

But there are a few major trends that I think will have a big impact on how compositing is done in the near future.

↪ Shifting to procedural workflows

Speed and efficiency are key focus areas in current R&D. Moore’s Law, the observation that the number of transistors on a chip doubles roughly every two years, has kept computing power growing rapidly, allowing developers to build more powerful tools that leverage both CPU and GPU performance.

As things get faster, we’ll see more complex algorithms handling repetitive tasks automatically. That includes things like tracking and roto — tasks that still take up a lot of time today.

Soon, 'smart' compositing software, likely powered by AI and deep learning, will become the norm. I’m not even going to get into quantum computing here, but just know that if big studios ever get their hands on a quantum computer, it could change everything.

↪ Real-time rendering is becoming a reality

A few years ago, real-time rendering felt out of reach, even on high-end machines. But now, thanks to huge improvements in graphics tech (especially in gaming), we’re starting to see what’s possible.

Plugins like Element 3D have already made a big difference for motion designers, allowing them to work in 3D with instant feedback. It’s still a relatively new technology, but it’s clear that in a few more years, it could become a game-changer for the VFX industry too.

The idea here is to remove the middle step between 3D and compositing. That way, shots can move back and forth between departments more easily. If a director wants a change, there’s no need to re-render everything from scratch.

Of course, high-end studios probably won’t switch to this kind of GPU workflow right away. It’ll take time for real-time rendering to reach the same level of realism as offline ray tracing. But small studios and freelancers are already starting to experiment with it.

New technologies are around the corner

So far we’ve talked about improving what already exists: faster workflows, better hardware, higher resolutions. But what about new tools that could really shake things up?

One interesting area is depth cameras and depth compositing.

Okay — to be fair, this isn’t exactly brand new. Depth-sensing tech has been around for a few years. But it hasn’t been fully adopted into VFX pipelines yet.

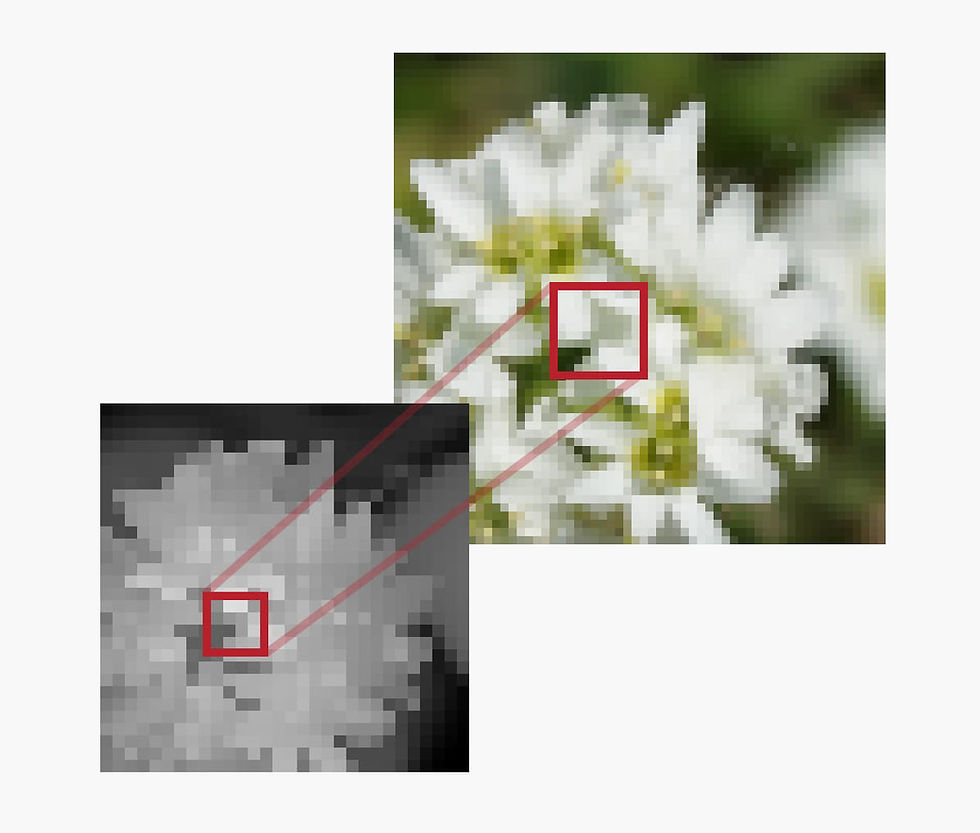

Here’s the idea: normally, cameras capture images using RGB color channels. With depth cameras, you’d add a fourth channel: Z, which stores each pixel’s distance from the camera. That can be done using infrared sensors (structured light or time-of-flight) or light field sensors.

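There’s still no clear standard for depth recorded in camera. Maybe we’ll get a new RGBZ video format (not something tools like Nuke support yet, even though they already read a Z channel from rendered image sequences such as OpenEXR), or maybe the Z data will be a separate pass. But either way, this opens up some big possibilities.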
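To make the two options concrete, here is a minimal sketch in plain Python of what "RGB plus a Z pass" could look like once both are loaded. The `RGBZFrame` class, its field names, and the choice of meters as the depth unit are all hypothetical and only for illustration; no existing file format or tool API is implied.

```python
from dataclasses import dataclass
from typing import List, Tuple

RGB = Tuple[float, float, float]


@dataclass
class RGBZFrame:
    """Hypothetical container: one RGB image plus a per-pixel depth (Z) plane."""

    rgb: List[List[RGB]]    # rows of (r, g, b) values in the 0..1 range
    z: List[List[float]]    # rows of distances from the camera (assumed meters)

    def __post_init__(self) -> None:
        # Both planes must describe the same pixel grid, whether they came
        # from one RGBZ file or from two separate passes.
        same_rows = len(self.rgb) == len(self.z)
        if not same_rows or any(len(c) != len(d) for c, d in zip(self.rgb, self.z)):
            raise ValueError("RGB and Z planes must have the same dimensions")

    def pixel(self, x: int, y: int) -> Tuple[float, float, float, float]:
        """Return the four-channel (r, g, b, z) value at column x, row y."""
        r, g, b = self.rgb[y][x]
        return (r, g, b, self.z[y][x])


# A tiny 2x1 frame: a near skin-tone pixel and a far blue pixel.
frame = RGBZFrame(
    rgb=[[(0.9, 0.7, 0.6), (0.1, 0.2, 0.8)]],
    z=[[1.5, 12.0]],
)
near_pixel = frame.pixel(0, 0)
far_pixel = frame.pixel(1, 0)
```

Whether Z travels inside the same file or as a separate pass, the comp only needs the two planes to agree on resolution and framing, which is what the check in `__post_init__` stands in for.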

↪ Why does depth matter?

We started with luminance mattes. Then came difference mattes and chroma keys. The next big thing? Depth mattes.

Why are they exciting?

Because with depth data, you could:

- Extract a subject from a live-action shot without roto or green screens
- Relight scenes in post without rebuilding everything in 3D
- Add depth of field, fog, or volumetric effects more easily
- Do better stereo conversions
- Even integrate 3D scans directly into your comp
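Two of the ideas above, pulling a matte from depth and adding fog, can be sketched in a few lines of plain Python. This is only an illustration under assumptions: the function names, the linear falloff, and the exponential fog model are my own choices, depth is assumed to be in meters, and a real comp would run this on full image buffers rather than nested lists.

```python
import math
from typing import List

Plane = List[List[float]]


def depth_matte(z: Plane, near: float, far: float, softness: float = 0.0) -> Plane:
    """Alpha is 1 inside [near, far] and ramps linearly to 0 over `softness` outside it."""

    def alpha(d: float) -> float:
        if near <= d <= far:
            return 1.0
        if softness <= 0.0:
            return 0.0  # hard-edged matte
        gap = near - d if d < near else d - far
        return max(0.0, 1.0 - gap / softness)

    return [[alpha(d) for d in row] for row in z]


def fog_amount(z: Plane, density: float) -> Plane:
    """Exponential fog: the share of fog color at distance d is 1 - exp(-density * d)."""
    return [[1.0 - math.exp(-density * d) for d in row] for row in z]


# One row of depth samples: an actor at 1.5 m and 2 m, a wall at 12 m.
z_pass = [[1.5, 2.0, 12.0]]

# Keep everything between 1 m and 3 m from the camera.
actor_matte = depth_matte(z_pass, near=1.0, far=3.0)  # [[1.0, 1.0, 0.0]]

# Distant pixels pick up more fog than near ones.
fog = fog_amount(z_pass, density=0.1)
```

The matte needs no green screen and no roto shapes, only a near and a far distance. Its quality depends entirely on how clean the depth pass is, which is where the limitations discussed next come in.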

The creative possibilities here are huge.

Compared to real-time rendering (which is still growing in small studios), depth compositing might move faster into the big-budget world — assuming the camera tech improves fast enough.

Studios like ILM or MPC might be early adopters. Just as motion capture started small and is now everywhere, depth compositing could follow a similar path, only quicker.

The current limitations

Right now, this tech isn’t perfect.

Infrared sensors struggle outdoors — sunlight interferes with them.

If you use multiple depth cameras, they need to be perfectly calibrated or they’ll clash.

Depth data only works well at certain distances — too close or too far causes issues.

And the resolution of depth sensors is usually much lower than RGB sensors.

But all of these problems can be improved with time and better technology.

One potential fix? Using RGB edge data to “sharpen” the depth pass. Each Z pixel would be split into 4 or 8 smaller ones, with the edges in the higher-resolution RGB image deciding which depth value each sub-pixel gets. The depth pass would then line up more accurately with the RGB image, improving matte quality and making compositing much smoother.
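One established technique in this spirit is joint bilateral upsampling, where a high-resolution guide image steers how low-resolution values are spread out. The sketch below is my own simplified version, not necessarily what a production tool would do: it assumes a single-channel guide (for example luma in the 0..1 range), an integer scale factor, and a 3x3 neighborhood of low-res samples. The function name and parameters are hypothetical.

```python
import math
from typing import List

Plane = List[List[float]]


def upsample_depth(z_low: Plane, guide: Plane, scale: int,
                   sigma_space: float = 1.0, sigma_color: float = 0.1) -> Plane:
    """Upsample z_low to the guide's resolution, weighting samples by guide similarity."""
    lh, lw = len(z_low), len(z_low[0])
    hh, hw = len(guide), len(guide[0])
    if hh != lh * scale or hw != lw * scale:
        raise ValueError("guide must be exactly `scale` times larger than z_low")

    out: Plane = []
    for y in range(hh):
        row = []
        for x in range(hw):
            cy, cx = y // scale, x // scale  # low-res pixel covering this output pixel
            total = weight_sum = 0.0
            for ly in range(max(0, cy - 1), min(lh, cy + 2)):
                for lx in range(max(0, cx - 1), min(lw, cx + 2)):
                    # Guide value at the center of this low-res sample's footprint.
                    gy = min(hh - 1, ly * scale + scale // 2)
                    gx = min(hw - 1, lx * scale + scale // 2)
                    d_space = (ly - cy) ** 2 + (lx - cx) ** 2
                    d_color = (guide[y][x] - guide[gy][gx]) ** 2
                    w = (math.exp(-d_space / (2 * sigma_space ** 2))
                         * math.exp(-d_color / (2 * sigma_color ** 2)))
                    total += w * z_low[ly][lx]
                    weight_sum += w
            # Fall back to the covering sample if every weight underflowed to zero.
            row.append(total / weight_sum if weight_sum > 0.0 else z_low[cy][cx])
        out.append(row)
    return out


# A 1x2 depth pass (actor at 1 m, wall at 10 m) and a 2x4 guide with a hard edge.
z_low = [[1.0, 10.0]]
edge_guide = [[0.0, 0.0, 1.0, 1.0],
              [0.0, 0.0, 1.0, 1.0]]
sharp = upsample_depth(z_low, edge_guide, scale=2)

# With a featureless guide there is no edge to follow, so depth values blend.
flat_guide = [[0.5] * 4, [0.5] * 4]
blended = upsample_depth(z_low, flat_guide, scale=2)
```

Where the guide shows an edge, depth stays crisp on both sides of it. Where the guide is flat, neighboring depth samples are averaged, which is the behavior you would want for a matte that follows the RGB silhouette.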

Nowadays, digital compositing is evolving faster than ever. And while the core principles haven’t changed, the tools and techniques definitely have.

From smarter, AI-powered software to real-time rendering and depth-based workflows, we’re on the edge of a big shift in how we work.

Some of these changes are already happening. Others are just over the horizon. Either way, it’s a great time to stay curious, keep experimenting, and be ready to adapt.